You may have heard about ChatGPT, the artificial intelligence language model that can understand and generate language. It uses machine learning to analyze and respond to users' questions. It can give you information about a wide range of topics.

In this post, I am going to explore five different ways you can use it. Some are great, some are terrible. I hope showing you the good and the bad will help you realize what you can and cannot use the tool for. I will provide five examples, organized from worst to best use of ChatGPT.

Before we start, it is important to keep in mind that ChatGPT is a language model and not a search engine, so it is good at creating sentences that are grammatically correct, but the information may be incorrect.

1. Ask ChatGPT about specific ancestors (very bad)

You can ask ChatGPT about specific ancestors. As it is a language model, it will often be able to generate a plausible-sounding text about them.

In this example, I asked it about my ancestor Arend Kastein. The text it generated is full of errors:

- Arend was born on 1 August 1817, not 9 January 1817.

- He was not a farmer and land owner, but a police constable.

- He did not serve on the town council.

- He was not known for progressive ideas nor was he involved in the development of farming techniques in the region.

- He died on 11 April 1903, not 8 January 1903.

This shows why it is a bad idea to ask a language model to generate a text about an ancestor. It will fill in the blanks with things that sound plausible, but it does not care about the facts. This is a bad use of ChatGPT. It can lead to a lot of garbage. Some people refer to this as ChatGPT “hallucinating,” which I think is an accurate description.

2. Ask ChatGPT for literature references (bad)

You can ask ChatGPT about sources or literature references for topics of interest. Here is an example where I ask it to suggest books about Haarlem in the 17th century.

I checked all five suggestions in WorldCat and none of the books exist. Some of the authors are legitimate writers about similar subjects, which is probably what prompted ChatGPT to generate these titles. ChatGPT does not care about truth; it cares about probable-sounding words.

These results are useless but are not likely to get you in trouble because you will notice the issue as soon as you actually try to consult one of these works.

3. Ask ChatGPT about historical context (meh)

You can ask ChatGPT about historical context. For example, I asked it what caused people to emigrate from the Netherlands in the 1840s.

The reply I got gave me four different angles to explore. I do not quite agree with the second point since it omits the government oppression of Christian Reformed people. While Catholics and Jews were discriminated against, I would not characterize that as persecution at that time. Another thing I do not agree with is the inclusion of Canada as a popular destination, since that did not start until the 1900s, and did not really take off until after World War II.

This example shows that you cannot just accept the answers but have to do your own further research to verify. It does give us four angles to explore further, which is useful. But the details can be wrong, which we wouldn’t know unless we did further research. This use of ChatGPT is risky, since you may not recognize the errors.

4. Ask ChatGPT about research strategies to find your ancestors (OK)

You can ask ChatGPT for strategies to use for specific research problems. For example, you can ask it to suggest records to find an occupation of an ancestor in the 1700s.

These suggestions are all valid, though not all completely accurate. For example, most marriage and burial records of churches do not have information about an individual’s occupation, though that is easy to check.

This use of ChatGPT is not risky, since you can just try the suggestions and if you do not find the occupation, there’s no harm done. It will not lead you to make mistakes and can spark some ideas you haven’t thought of.

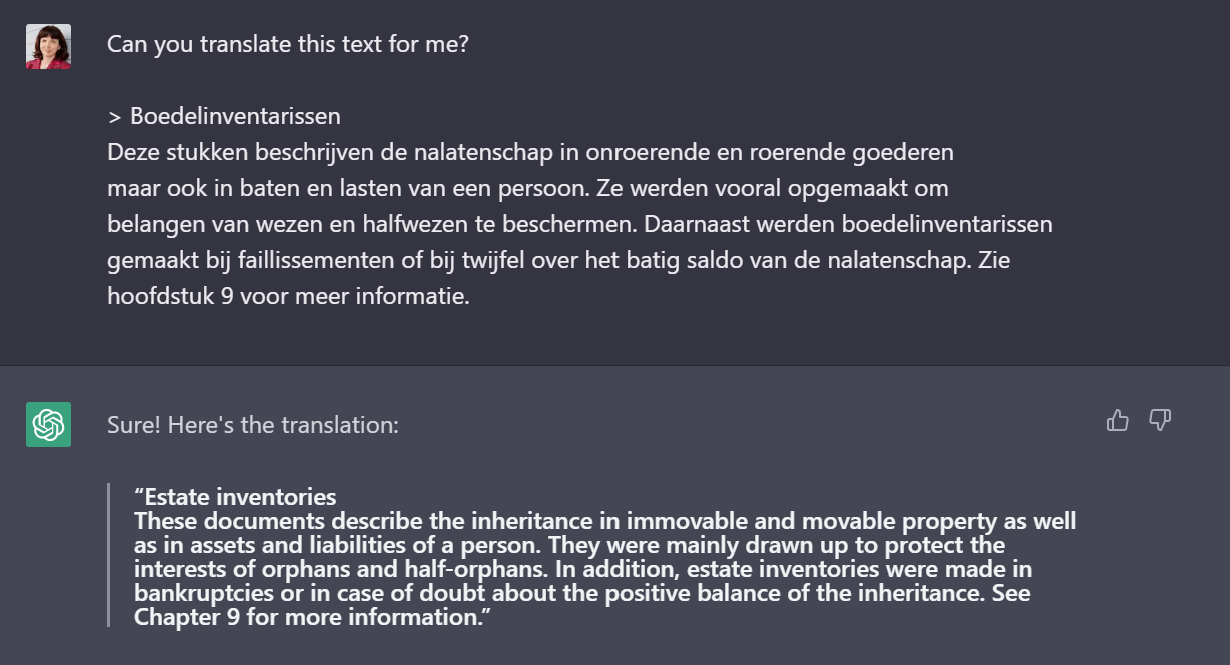

5. Ask ChatGPT to translate texts (great)

If you do find information in a language you do not know, you can use ChatGPT to translate for you. For example, if you have a Dutch text you want to translate to English, you can just write a question like “Can you translate this text to English for me” and then paste the Dutch text. Some things you can use this for:

- Transcriptions of original records

- Research guides you find on websites of archives

- Family trees

- Public domain books you find on the Internet Archive.

For example, the Groninger Archieven has published a research guide (PDF). In it you find a paragraph about “boedelinventarissen” that looks interesting but you do not understand it. You can copy the text from the PDF and ask ChatGPT to translate it.

This is all about language, and this is where ChatGPT shines. I am impressed with the result. The translation is accurate and understandable. It captures all of the information in the original. This is a great way to use ChatGPT!

Conclusion

ChatGPT has enormous potential, both to be helpful and to be misleading. Like any tool, we have to learn its strengths and weaknesses so we know when to use it. It shines when we use it for things to do with language, such as translating, summarizing, and editing. It can get us in trouble when we ask it to generate specific facts. ChatGPT can be useful to generate ideas that we can then follow up or check ourselves.

How have you been using ChatGPT?

About a month ago, I made up a name “Frantisek Aktionis”. I googled the name to make sure there really was nothing online about someone with that name. Then I told ChatGPT “write a brief biography of Frantisek Aktionis”. And it did, telling me dates, places, and that he was the father of a form of art — all of which was completely made up.

At least ChatGPT has become smarter since then because now when I try this, it tells me it cannot find any information about Frantisek Aktionis.

One early use of AI was the automatic translation machine. One such early machine was programmed to translate between English and Russian. As a test, it was asked to translate “The spirit was willing but the flesh was weak” into Russian and then back into English. The resultant output was “The vodka was good but the meat was bad”. I think that this example shows potential limitations of the use of AI.

I’m using ChatGPT to analyse text like biographies. It identifies persons, events, dates and generates perfect GEDCOM.

I would like to give this a shot. Can you give an example of how you made this work?